Abstract

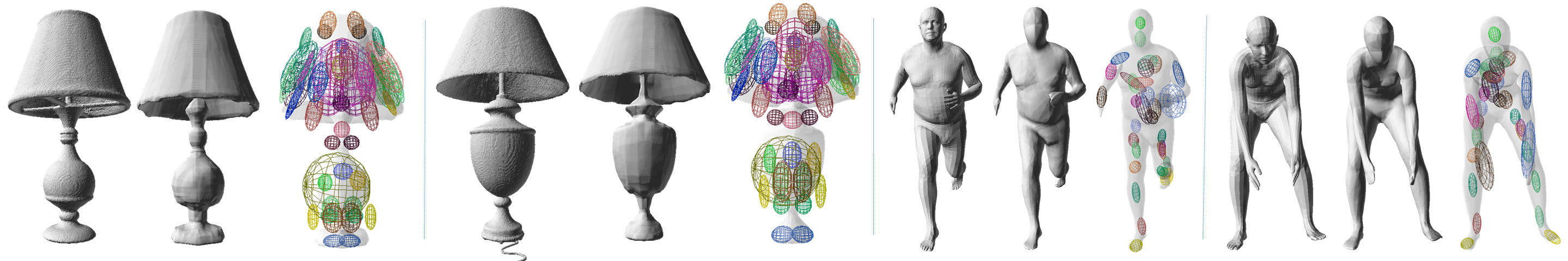

The goal of this project is to learn a 3D shape representation that enables accurate surface reconstruction, compact storage, efficient computation, consistency for similar shapes, generalization across diverse shape categories, and inference from depth camera observations. To this end, we introduce Local Deep Implicit Functions (LDIF), a 3D shape representation that decomposes space into a structured set of learned implicit functions. We provide networks that infer the space decomposition and local deep implicit functions from a 3D mesh or posed depth image. In experiments, LDIF provides 10.3 points higher surface reconstruction accuracy (F-Score) than the state of the art (OccNet), while requiring fewer than 1% of the network parameters. Experiments on posed depth image completion and generalization to unseen classes show 15.8 and 17.8 point improvements over the state of the art, respectively, while producing a structured 3D representation for each input that is consistent across diverse shape collections.
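To make the idea of "a structured set of learned implicit functions" concrete, here is a minimal sketch in plain Python. It is not the released LDIF code: the `LocalElement` class, the isotropic Gaussian weighting, the `residual` callable standing in for a learned local network, and the `iso_level` threshold are all assumptions chosen for illustration. Each element covers a region of space, and the global implicit value at a point is the sum of the elements' locally weighted contributions.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float, float]


@dataclass
class LocalElement:
    """One hypothetical local element of the decomposition."""
    center: Point                       # where the element sits in space
    radius: float                       # isotropic extent (an assumption)
    scale: float                        # signed constant; negative means "inside"
    residual: Callable[[Point], float]  # stand-in for a learned local function


def gaussian_weight(x: Point, center: Point, radius: float) -> float:
    """Isotropic Gaussian falloff around the element center."""
    d2 = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-d2 / (2.0 * radius ** 2))


def evaluate(elements: Sequence[LocalElement], x: Point) -> float:
    """Sum the locally weighted contributions of all elements at point x."""
    total = 0.0
    for e in elements:
        # The local function sees the point in the element's own frame.
        local = tuple(a - b for a, b in zip(x, e.center))
        weight = gaussian_weight(x, e.center, e.radius)
        total += e.scale * weight * (1.0 + e.residual(local))
    return total


def is_inside(elements: Sequence[LocalElement], x: Point,
              iso_level: float = -0.5) -> bool:
    """Classify x as inside the shape; iso_level is an illustrative threshold."""
    return evaluate(elements, x) < iso_level


# A single element with a zero residual behaves like a soft blob.
blob = [LocalElement(center=(0.0, 0.0, 0.0), radius=1.0, scale=-1.0,
                     residual=lambda p: 0.0)]
```

In this sketch, `is_inside(blob, (0.0, 0.0, 0.0))` is true and a point far from the center is classified as outside. Replacing `residual` with a small per-element network is what would let each local region capture fine surface detail.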

Video

Paper

Code

Code is now available for both LDIF and SIF on GitHub.

BibTeX

@inproceedings{genova2020local,
  title={Local Deep Implicit Functions for 3D Shape},
  author={Genova, Kyle and Cole, Forrester and Sud, Avneesh and Sarna, Aaron and Funkhouser, Thomas},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={4857--4866},
  year={2020}
}